Today the Forum on Financial Supervision at LSE’s Systemic Risk Centre published my essay, “AI and Category Errors”. In it, I pose the question: Is AI a model, software, or third party service? The answer matters because the risk management frameworks for each are quite different. Below is an excerpt.

At regulated financial institutions, models, software, and services are governed separately and subject to distinct governance and risk management frameworks. The calculations done by models are governed by model risk management (MRM) processes and supervisory expectations, like the Federal Reserve’s SR 11-7.[1] The execution done by software is governed by banks’ software development life cycle (SDLC) processes and typically overseen by IT functions. And the services purchased from vendors are governed by third party risk management (TPRM) processes and expectations.

AI systems challenge these distinctions.

For instance, is ChatGPT a model, a software application, or a service? Like models, it calculates outputs (i.e., tokens) in response to inputs (i.e., prompts). Like software, it can do things like answer questions, draft memos, write code, detect fraud, and execute a wide range of tasks via tool calls. And like services, it is purchased by banks from third parties to support and enable banking functions. In short, AI applications have features of models, software, and services.

How, then, should banks go about risk managing AI given its hybrid nature? Clearly, subjecting AI deployments to just one framework, such as model risk management, would be a category error, as that would ignore its other risks, e.g., when executing tasks like software and in its reliance on third parties. Conversely, subjecting AI to all three frameworks could also be a category error if the unique features and risks of AI, as discussed above, are not properly addressed.

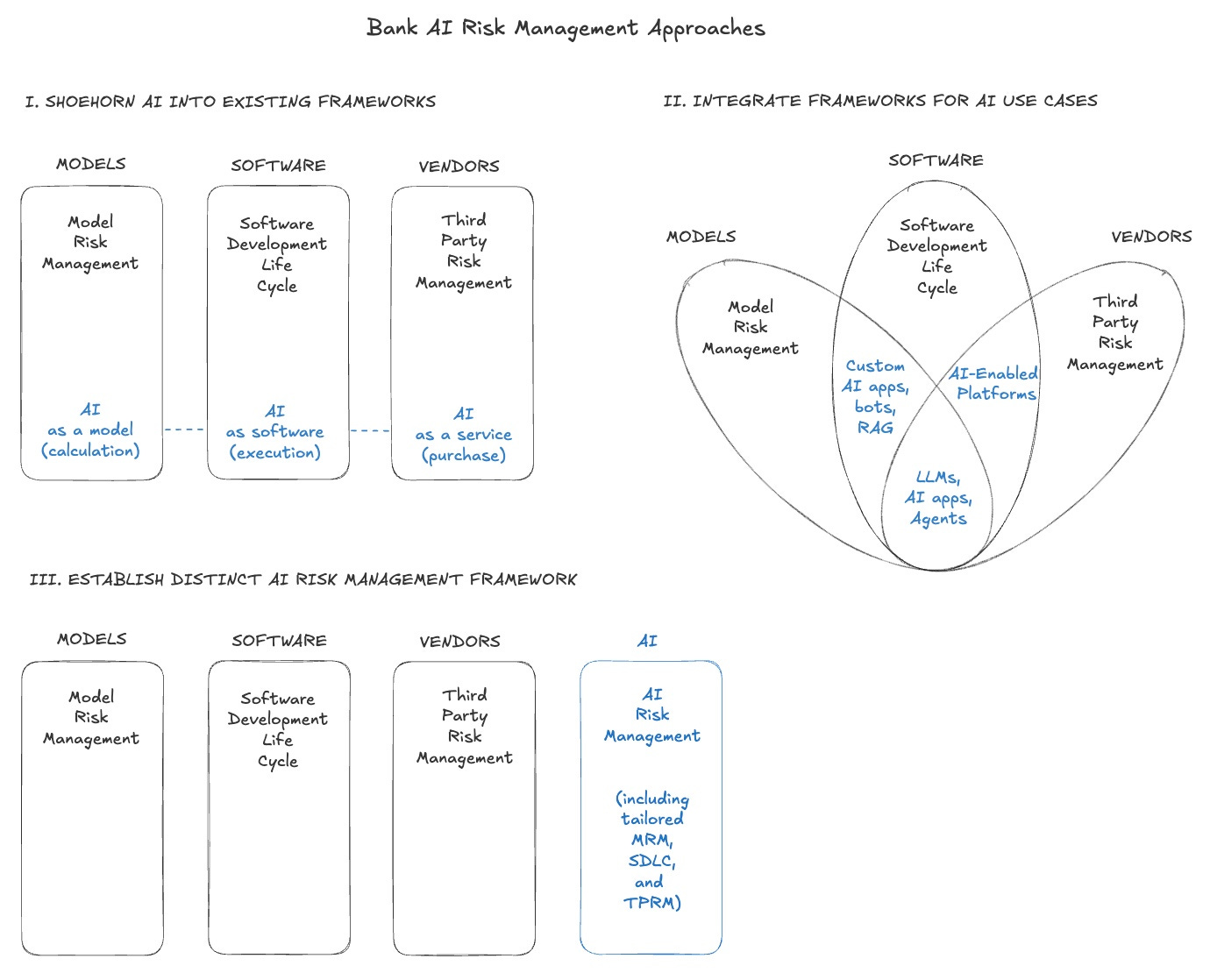

Banks must start somewhere, though, and are likely to take one of three approaches to risk managing their AI deployments.[2] The first approach is to shoehorn AI into one or more of the existing risk governance and control processes, perhaps with a coordinating mechanism, like an AI oversight committee, to bridge across silos. This is depicted in the “I. Shoehorn” part of the accompanying graphic.

The second approach is to focus on the AI use case, acknowledge the overlap between frameworks, and then modify and integrate them. This is shown in “II. Integrate”, where specific AI deployments (in blue) straddle two or more frameworks. For instance, AI-enabled coding platforms like Cursor may implicate SDLC and TPRM while operating beyond the reach of MRM, while customized retrieval augmented generation systems developed by a bank may implicate MRM and SDLC but not TPRM.

The third approach is to develop a distinct governance and risk management framework just for AI, grounded in first principles. As depicted in “III”, such an approach would contain elements of MRM, SDLC, and TPRM but tailored for AI.

The pace, breadth, and depth of AI adoption vary enormously across banks. As such, there is no one-size-fits-all best approach yet. Moreover, the rapidly evolving nature of AI means that the risk of category error is high. Thus, no matter which approach is taken, banks and regulators need to focus on outcomes and revisit framework decisions frequently until best practices clearly emerge.

Flexibility and agility will be required for AI risk management frameworks to be effective and remain relevant. Today, for instance, LLMs can be connected to tools that can autonomously browse and use the internet. An AI agent can be set up to receive instructions, such as “You are a professional-grade portfolio strategist. Your objective is to generate maximum return from today to 12-27-25. You have full control over position sizing, risk management, stop-loss placement, and order types”, and then autonomously research the web and enter credentials into a brokerage site to execute trades – all without any further intervention by the user.[3] Existing MRM, SDLC, and TPRM frameworks are not fit to address the risks of such a setup, which is itself rapidly evolving. Thus, to be effective, an AI risk management framework must not only be tailored to AI’s capabilities and risks today but also be able to adapt as applications of the technology change over time.

[1] Federal Reserve, SR 11-7: Guidance on Model Risk Management (April 4, 2011)

[2] While the NIST AI Risk Management Framework provides a standard that is broadly accepted and recognized, including by banks, this essay focuses on practical bank risk management practices, which are generally implemented through MRM, SDLC, and TPRM processes.

[3] This prompt comes from high school student Nathan Smith’s experiment to see if ChatGPT can beat the Russell 2000 index by buying and selling small cap stocks. Smith started with $100 on June 27, 2025. As of August 29, Smith’s portfolio is up 26.9% compared to the index’s 4.8%. See Smith’s GitHub Repo for details.
